I Stopped Mastodon DDoSing Me (I Think)

I recently wrote about how Mastodon is DDoSing me every time I post a link to this site. I've managed to fix the problem...I think.

After writing the previous post, EchoFeed sucked it up and spat it out over on Mastodon. True to form, my site went down for a few minutes. Worse still, the community picked it up, and a number of other users with a decent following re-shared it, taking my site down over and over again. 😢

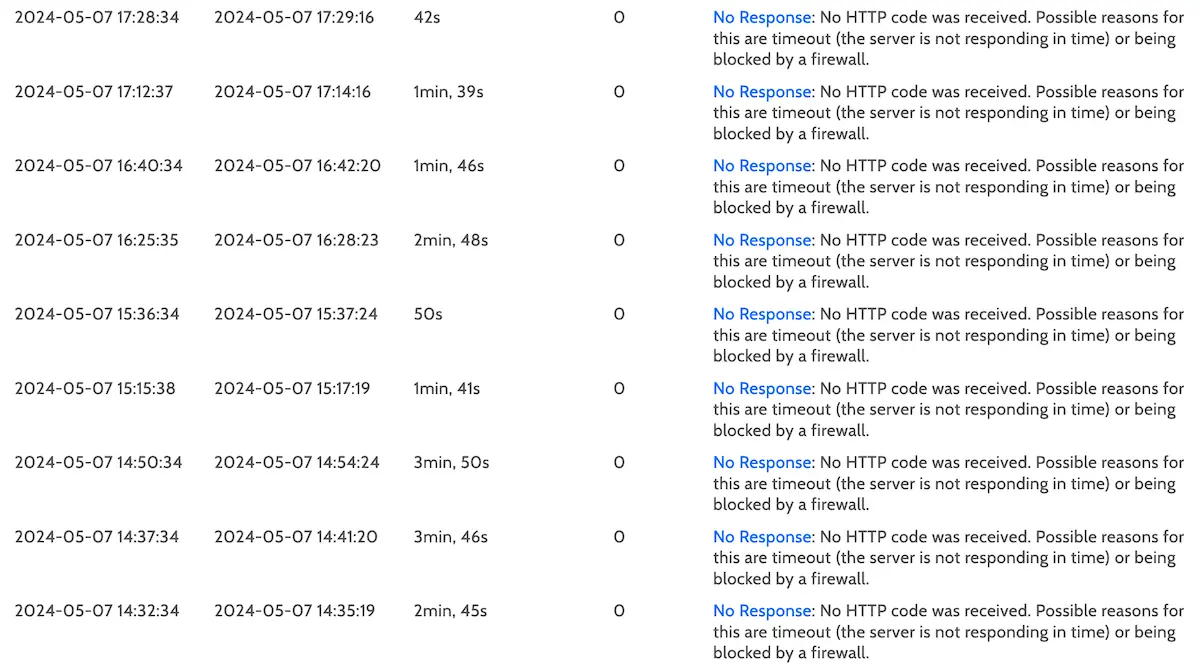

I keep an eye on a number of my sites with Downtime Monkey, and as you can see below, it recorded quite a few outages on the day I wrote the original post:

It’s not all bad though, as the community, in all its techie glory, stepped up and gave me lots of advice and opinions. It was clear from my logs that the site wasn’t going down because the server was out of resources, so I had a feeling it was something I could work around. I just needed to make the time to do it.

So, over the last couple of evenings, I decided to dig in and troubleshoot the issue in the hope that I would come up with a solution.

What I tried

The overwhelming advice was to set up caching so that my pages didn’t need to be rendered with PHP on every load. I already knew this, and I had already set up caching on Kirby.
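
For anyone unfamiliar with the idea, here is a rough sketch of what a page cache does. It's in Python purely for illustration (it is not how Kirby implements its cache), and the `PageCache` class and its parameters are made-up names: the expensive render runs once, and every later request within the time-to-live gets the stored copy.

```python
import time
from typing import Callable, Dict, Tuple


class PageCache:
    """Tiny in-memory page cache: render once, then serve the stored copy.

    Hypothetical illustration only; not Kirby's cache.
    """

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        # path -> (time the page was rendered, rendered HTML)
        self._store: Dict[str, Tuple[float, str]] = {}

    def get(self, path: str, render: Callable[[str], str]) -> str:
        now = self.clock()
        cached = self._store.get(path)
        if cached is not None and now - cached[0] < self.ttl_seconds:
            return cached[1]  # cache hit: no rendering work at all
        html = render(path)  # cache miss: do the expensive render
        self._store[path] = (now, html)
        return html
```

With something like this in front of the renderer, a hundred requests for the same post inside the TTL cost one render rather than a hundred.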

It seemed my caching solution wasn’t up to the task, so some people recommended Varnish (which I’ve used in the past to great effect), while others recommended switching to a static site (not very helpful).

Then there was the nuclear option (thanks to Manu for the recommendation): simply block the Mastodon user agent at the server level so instances can’t pull the metadata. Links would still work, but cards wouldn’t. That’s fine, but I wanted to keep this as a last resort, especially since we’re looking at a server/site config issue here, rather than a resource issue.
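
As a rough sketch of what that block boils down to (again in Python for illustration, where a real setup would do this in the web server config), the check is just a substring match on the `User-Agent` header. It assumes Mastodon instances identify themselves with a string containing `Mastodon/<version>`, and the function names are made up for the example.

```python
def is_mastodon_fetcher(user_agent: str) -> bool:
    """Return True if the User-Agent looks like a Mastodon instance.

    Assumption: the header contains "Mastodon/<version>", e.g.
    "http.rb/5.1.1 (Mastodon/4.2.8; +https://social.example/)".
    """
    return "mastodon/" in user_agent.lower()


def status_for_request(user_agent: str) -> int:
    """403 for Mastodon's link-preview fetches, 200 for everyone else."""
    return 403 if is_mastodon_fetcher(user_agent) else 200
```

People clicking the link in their browser send an ordinary browser user agent, so they would still get through. Only the preview card fetches would be refused.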

Varnish seemed like the best bet, especially since I’d used it in the past. So I spun up a VPS on Digital Ocean and set to work installing and configuring Varnish.

Ten minutes later I had Varnish set up and I posted a link on Mastodon to my staging site. I had an SSH session running so I could monitor logs in relative real-time and I quickly saw all the requests come flooding in.

My staging site stayed up. 🎉

But I didn’t fancy paying for, and managing, an additional server just because of this issue. It felt a bit like smashing walnuts with a sledgehammer. So I next tried to set up Varnish on my existing server, via Docker. I managed to get this working too, but it was causing problems with my Kirby panel, and since this is the main way I produce content, it was a deal-breaker for me.

Then, someone from the Kirby team reached out on Mastodon and mentioned their Staticache plugin. I’d tried Staticache in the past, but it had caused problems on various parts of my site, with little gain over the standard caching, so I always abandoned it.

This time I decided to dig in and work through those issues. I set it all up, and things seemed to be going OK. There was no significant performance improvement, however, and I wasn’t sure if it was all working correctly.

So, I decided to ask on the Kirby forums how one would know if Staticache is working, and it turns out it adds a little HTML comment to the end of the cached page, like this:

<!-- static 2024-05-09T09:07:09+00:00 -->

I checked my homepage, all good; I could see the HTML comment. But there didn’t seem to be any confirmation comment on my post pages. Weird.
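
Checking page by page gets tedious, so here is a small sketch of how that check could be scripted. It assumes the comment always has the shape shown above (`static` followed by an ISO 8601 timestamp) and sits at the very end of the HTML. The function name is made up, and you would feed it the body of whichever page you have fetched.

```python
import re
from datetime import datetime
from typing import Optional

# Matches a trailing comment shaped like the example above:
# <!-- static 2024-05-09T09:07:09+00:00 -->
STATIC_COMMENT = re.compile(r"<!--\s*static\s+(\S+)\s*-->\s*$")


def staticache_timestamp(html: str) -> Optional[datetime]:
    """Return the timestamp from a trailing "static" comment, or None.

    None means the page body does not end with a well-formed comment,
    which suggests the page was not served from the static cache.
    """
    match = STATIC_COMMENT.search(html)
    if match is None:
        return None
    try:
        return datetime.fromisoformat(match.group(1))
    except ValueError:
        # Comment present but the timestamp is not valid ISO 8601.
        return None
```

Running this over the homepage and a post page would have flagged the difference straight away: a timestamp for one, `None` for the other.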

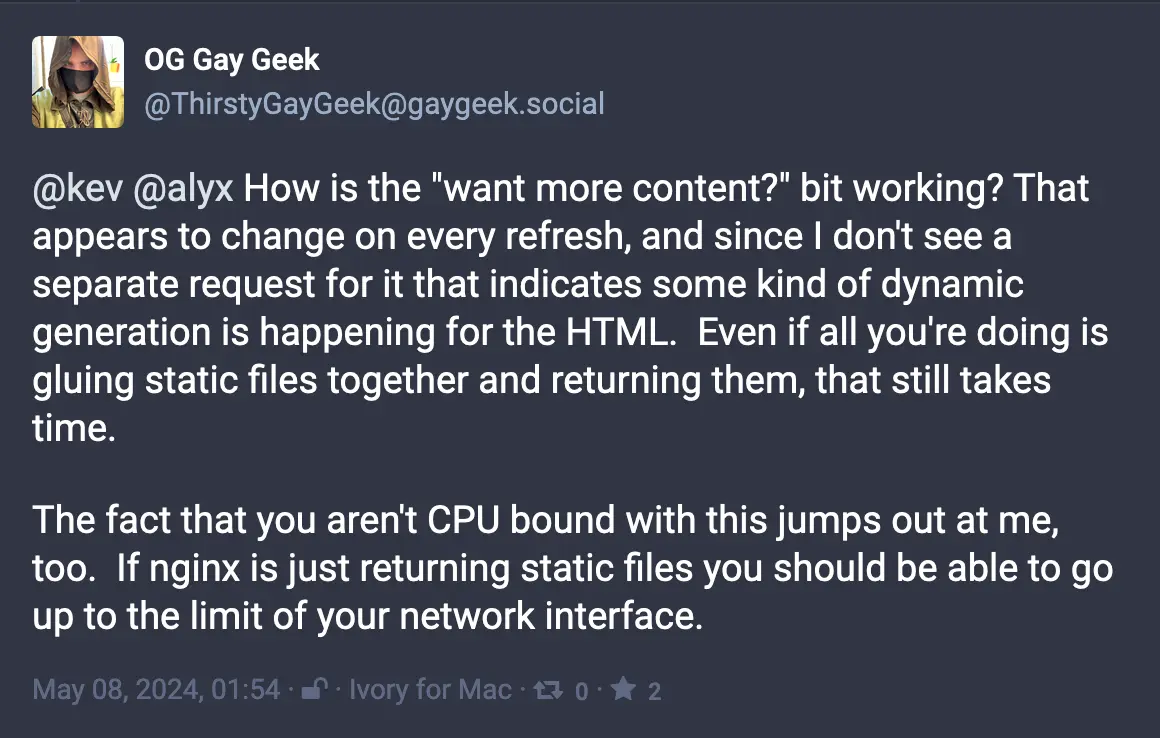

Then I saw this comment on Mastodon:

The “want more content?” thing they’re talking about was a little box after every post that displayed links to 3 random posts. This was generated dynamically on every page load so that people would get 3 different links every time. Because of this, caching was not possible.

Eureka! 💡

I swapped this little PHP script for a button that lets folk visit a random post instead and, hey presto, caching of blog posts started working.
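
One way to picture why this helps: the post page now contains the same fixed link on every load, and the randomness only happens when someone actually presses the button. The sketch below is illustrative Python, not the actual Kirby/PHP code; the `/random` route it imagines and the `pick_random_post` name are hypothetical.

```python
import random
from typing import Optional, Sequence


def pick_random_post(slugs: Sequence[str],
                     current: Optional[str] = None,
                     rng: Optional[random.Random] = None) -> str:
    """Choose a post to send the visitor to, skipping the current one.

    Imagined to run behind a hypothetical /random route, so cached post
    pages only ever contain a static link to that route.
    """
    rng = rng or random.Random()
    candidates = [slug for slug in slugs if slug != current]
    if not candidates:
        raise ValueError("no other posts to choose from")
    return rng.choice(candidates)
```

The post HTML stays byte-for-byte identical between requests, which is exactly what a static cache needs.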

Testing things

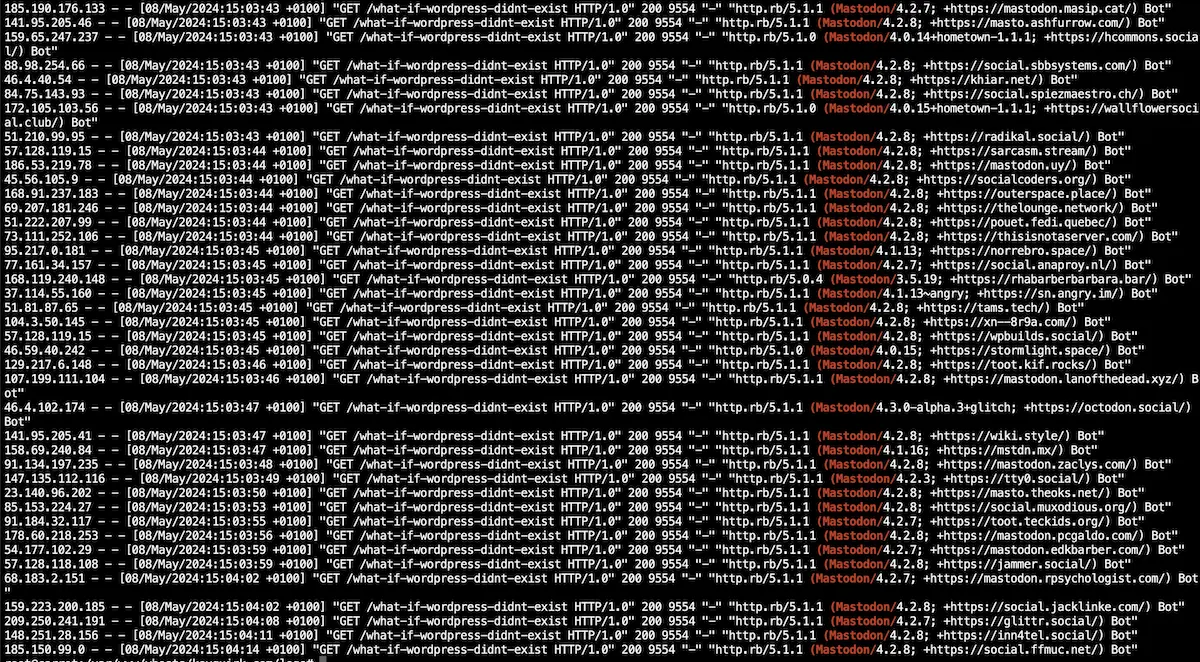

Now I knew Staticache was working correctly, it was time to test it. So I fired out a test link to a random post on my staging site (this was to ensure it wasn’t cached on Mastodon) and I immediately saw the swarm of instances hitting my server:

…and my staging site stayed up. 🎉 🎉
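
If you would rather count the swarm than eyeball it, something like the following sketch could tally hits per instance. It assumes a combined-format access log where the user agent is the last quoted field, and it assumes Mastodon's user agent includes the instance URL after a `+`. Both are assumptions about your setup, and the function name is made up.

```python
import re
from collections import Counter
from typing import Iterable

# In a combined-format access log the User-Agent is the last quoted field.
USER_AGENT = re.compile(r'"([^"]*)"\s*$')
# Assumed Mastodon UA shape: "... (Mastodon/4.2.8; +https://instance.example/)"
INSTANCE = re.compile(r"Mastodon/[^;]+;\s*\+https?://([^/)\s]+)", re.IGNORECASE)


def mastodon_hits_by_instance(lines: Iterable[str]) -> Counter:
    """Count requests per Mastodon instance from access log lines."""
    hits: Counter = Counter()
    for line in lines:
        ua_match = USER_AGENT.search(line)
        if ua_match is None:
            continue  # not a line we know how to parse
        instance = INSTANCE.search(ua_match.group(1))
        if instance is not None:
            hits[instance.group(1).lower()] += 1
    return hits
```

Pass it the lines of your access log (the path depends on your server) and `hits.most_common(10)` will show which instances fetched the page most often.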

I haven’t tested things on the live site yet, so we will see how things hold up when the link to this post gets sent over to Mastodon.

Final thoughts

There were a number of people who commented on Mastodon (and elsewhere) saying that this is a simple problem to fix on the server side. I don’t think that’s fair. It’s a problem that can be fixed server side, but I wouldn’t say it’s been a simple problem to fix. Especially for someone like me, who isn’t a sysadmin.

I think if you follow some hosting best practices, like avoiding bloat, caching where possible, compressing, and using a decent server, then you should be able to work around the problem.

I still think this is a problem that should be fixed from the Mastodon side, but I’m glad I was able to find a workaround.

Finally, I’d like to thank everyone who commented and provided advice. That’s one of the things I love about the community over on the Fedi - people are, for the most part, willing to help without being a dick about it. So thanks, everyone.

There are a few little issues I’ve discovered on the site, now that caching is working correctly, but so far I’ve been able to fix those too. If you notice anything that’s broken, please let me know.

In the meantime, let’s get this post published and see if Mastodon takes down my site…

Update: This post just hit Mastodon. I can see the flood in the logs and my site didn’t even blink. Yay!